28-year-old Blaire knows exactly what it's like to be violated online.

As someone with a public profile who creates content on the streaming platform Twitch under the name 'QTCinderella', Blaire is inundated with comments from complete strangers every day. And those comments are often sexually inappropriate and uncomfortable to read.

These comments spiked recently when Blaire received a flurry of DMs saying an 'adult video' of her was going viral on a pornographic website. Blaire was confused and immediately distraught. She had never created such a video. She knew that for a fact. So what had happened?

Blaire quickly learned she had become a victim of deepfake porn.

Her face had been pasted onto another person's body to make it look as though Blaire was genuinely appearing in a pornographic video. And that video was being seen by thousands of people.

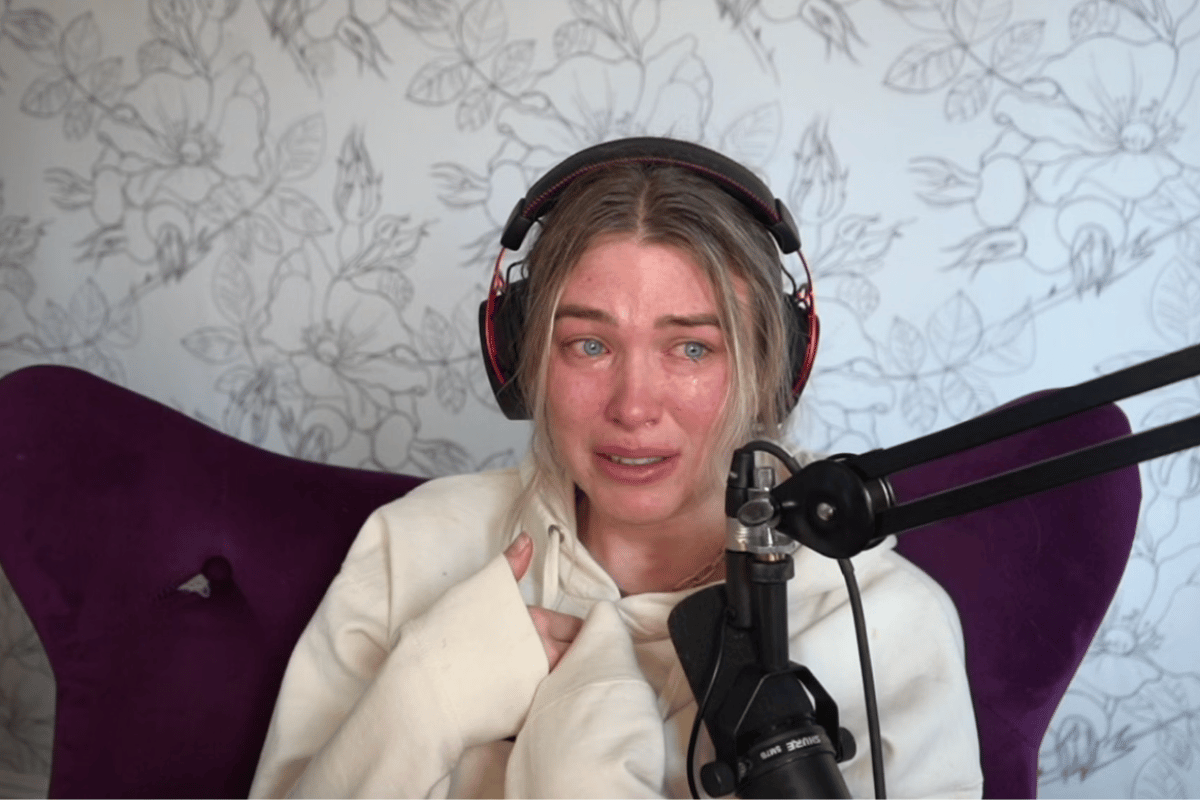

While this is a situation that would make many retreat from online spaces, Blaire decided instead to 'go live' on a Twitch stream.

"I wanted to go live because this is what pain looks like. F**k the f**king internet. F**k the people DM'ing me pictures of myself from that website," she said during a stream, visibly distraught.
